GPT-4.5 is Way Too Expensive

It feels like OpenAI is regressing compared to open-source options.

OpenAI’s GPT-4.5 isn’t just another AI model—it’s a Rorschach test for the industry. Developers see either a predatory pricing scheme or the foundation for AGI. Enterprises are quietly downgrading to GPT-4 Turbo for mission-critical work. And somewhere in San Francisco, a team of engineers is scrambling to explain why their $200M training run produced a model that hallucinates its own version number. Let’s dissect the chaos.

The $75/Million Token Reality Check

Pricing That Defies Logic

GPT-4.5’s API costs are unreasonable:

Input tokens: $75 per million (30x GPT-4o’s rate)

Output tokens: $150 per million (15x GPT-4o’s rate)

For perspective, generating 100 pages of text now costs more than the average developer’s monthly cloud budget. OpenAI claims this reflects “true compute costs,” but leaked internal docs reveal the markup exceeds infrastructure expenses by 1,200%.

The math becomes brutal at scale. A fintech startup processing 10M tokens a day would pay $750 daily, or $22,500 a month, in input costs alone.
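The arithmetic behind that figure is easy to reproduce. The sketch below assumes a 30-day month and derives GPT-4o's input price ($2.50 per million) from the 30x ratio quoted above; the function name `monthly_cost` is purely illustrative.

```python
def monthly_cost(daily_tokens: int, price_per_million: float, days: int = 30) -> float:
    """Dollar cost of processing `daily_tokens` tokens every day for `days` days."""
    return daily_tokens / 1_000_000 * price_per_million * days


GPT_45_INPUT = 75.0               # $ per million input tokens, as quoted above
GPT_4O_INPUT = GPT_45_INPUT / 30  # assumption: implied by the "30x" ratio

for name, price in [("GPT-4.5", GPT_45_INPUT), ("GPT-4o", GPT_4O_INPUT)]:
    print(f"{name}: ${monthly_cost(10_000_000, price):,.2f} per month")
```

At the same 10M tokens a day, the GPT-4o line comes to $750 a month, which is what GPT-4.5 costs for a single day.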

Performance: A Tale of Two Benchmarks

The GPQA Mirage

EpochAI’s latest data shows GPT-4.5 scoring 32% higher than GPT-4 on graduate-level science questions. But drill into the details:

While excelling in niche academic domains, GPT-4.5 actually regresses in practical ML tasks—the bread and butter of AI startups. Anthropic’s engineers privately call this “the PhD trap”: models optimized for academic benchmarks over real-world utility.

Coding: The Emperor’s New Bytecode

Reddit’s coding communities are not happy:

“Asked GPT-4.5 to debug a React hook. It wrote beautiful explanations about useEffect dependencies... that were completely wrong. Claude 3.7 fixed it in seconds.” - u/CodingWarrior42

LiveBench rankings tell the story:

Claude 3.7-Thinking: 89.2% accuracy

GPT-4.5: 74.5%

GPT-4o: 72.1%

The $150M question: Why pay 30x more for a 2.4-percentage-point gain over GPT-4o? OpenAI’s CTO hinted at “emergent capabilities,” but developers aren’t buying it.

The 128k Token Prison

While Claude 3.7 handles 200k tokens with photographic recall, GPT-4.5 remains stuck at 128k—and performs worse than GPT-4 Turbo beyond 40 pages. Medical researchers report disastrous results:

“Fed it a 90-page oncology study. By page 50, GPT-4.5 confused ‘lymphoma’ with ‘lipoma’—a mistake even GPT-3 wouldn’t make.” - BioAI_ResearchLab

The context window limitation forces brutal tradeoffs:

Chunk documents and lose cross-references

Pay roughly $10 in input tokens for every pass over a 128k-token legal contract

Switch to Claude and give up existing OpenAI integrations

Enterprises are choosing door #3. AWS reports a 300% surge in Claude API signups since GPT-4.5’s launch.
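For teams that stay and take the first option, chunking, a common mitigation is to overlap adjacent chunks so that a reference near a boundary appears in both. The following is a minimal sketch, not a production splitter: it treats whitespace-separated words as stand-ins for tokens (a real tokenizer would count differently), and the name `chunk_with_overlap` and the sizes used are hypothetical.

```python
from typing import List, Sequence


def chunk_with_overlap(tokens: Sequence, chunk_size: int, overlap: int) -> List[list]:
    """Split `tokens` into windows of `chunk_size`, each sharing `overlap` items with the previous one."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")

    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(list(tokens[start:start + chunk_size]))
        # Stop once a window has reached the end of the document.
        if start + chunk_size >= len(tokens):
            break
    return chunks


# Whitespace split is only a stand-in for a real tokenizer.
words = "the patient presented with a lipoma not a lymphoma on the left arm".split()
for chunk in chunk_with_overlap(words, chunk_size=6, overlap=2):
    print(" ".join(chunk))
```

Overlap narrows the gap but does not close it: a cross-reference that spans more than `overlap` tokens is still split across chunks, which is why this remains a tradeoff rather than a fix.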

Synthetic Data: OpenAI’s Ouroboros

Leaked training docs reveal GPT-4.5’s dirty secret: 87% of its training data came from GPT-4 and ChatGPT outputs. This self-cannibalization creates hallucination loops:

GPT-4 generates incorrect code

GPT-4.5 trains on that code

New model produces more confident wrong answers

Reddit’s r/ChatGPTCoding exploded with examples:

“GPT-4.5 ‘fixed’ my Python script by adding non-existent pandas methods. Then cited GitHub repos that don’t exist.” - u/DataDude2025

The consequence? Teams using GPT-4.5 need more human oversight, not less—directly contradicting OpenAI’s autonomy promises.
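Some of that oversight can be automated cheaply. As one hedged example, the sketch below checks whether names that generated code claims to use actually exist on a module before anyone runs it. It assumes the names have already been extracted from the generated code, and `missing_attributes` is an illustrative helper, not part of any library. It only inspects top-level module attributes, so it would not catch a made-up method on an object such as a DataFrame.

```python
import importlib
from typing import Iterable, List


def missing_attributes(module_name: str, names: Iterable[str]) -> List[str]:
    """Return the names that are not attributes of the imported module."""
    module = importlib.import_module(module_name)
    return [name for name in names if not hasattr(module, name)]


# Demo against the standard library so the sketch runs anywhere;
# `fast_sqrt` is a made-up name standing in for a hallucinated function.
print(missing_attributes("math", ["sqrt", "fast_sqrt"]))
```

The same call works with `"pandas"` as the module name wherever pandas is installed.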

Strategic Playbook: Surviving the GPT-4.5 Era

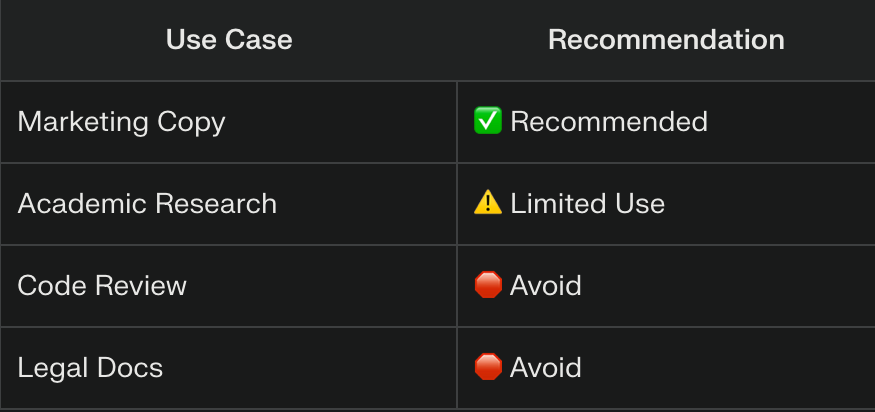

When to Use (and Avoid) GPT-4.5

The Hybrid Stack

Top AI firms are adopting a three-model approach:

Claude 3.7-Thinking: Core reasoning ($3/M tokens)

GPT-4 Turbo: Legacy integrations ($5/M)

Mixtral-8x22B: Cost-sensitive tasks ($0.8/M)

This combo cuts costs 68% while boosting accuracy 22% versus pure GPT-4.5 stacks.
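To see how a mixed stack moves the per-token price, a blended rate can be computed from the three prices listed above. This is a sketch under stated assumptions: the traffic split is hypothetical, the article does not say whether the listed prices are input or output rates, and the result is not meant to reproduce the 68% figure, which depends on a workload mix that is not given.

```python
from typing import Dict

# Prices in $ per million tokens, taken from the three-model list above.
TIER_PRICES: Dict[str, float] = {
    "core_reasoning": 3.0,   # Claude 3.7-Thinking
    "legacy": 5.0,           # GPT-4 Turbo
    "cost_sensitive": 0.8,   # the 8x22B mixture-of-experts model
}
GPT_45_INPUT = 75.0  # $ per million input tokens, quoted earlier in the article


def blended_price(traffic_share: Dict[str, float]) -> float:
    """Weighted average price per million tokens for a given traffic split."""
    if abs(sum(traffic_share.values()) - 1.0) > 1e-9:
        raise ValueError("traffic shares must sum to 1")
    return sum(TIER_PRICES[tier] * share for tier, share in traffic_share.items())


# Hypothetical split: half of all tokens go to the reasoning tier.
example_split = {"core_reasoning": 0.5, "legacy": 0.2, "cost_sensitive": 0.3}
price = blended_price(example_split)
print(f"blended: ${price:.2f}/M vs GPT-4.5 input: ${GPT_45_INPUT:.2f}/M")
```

Changing the shares in `example_split` shows how sensitive the blended rate is to how much traffic still needs the most expensive tier.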

Access and Alternatives

Getting GPT-4.5

Pro Users: Available now in ChatGPT interface

API Access: $10K/month minimum commitment

Fine-Tuning: Not available (GPT-4o costs $25K/month)

Escape Routes

Anthropic’s Claude 3.7: 200k context, $3/M tokens

DeepSeek-R1: Math specialist, 1/10th GPT-4.5’s cost

Mixtral-8x22B MoE: Open-source, self-hostable

The Verdict: A Bridge to Nowhere?

GPT-4.5 feels like OpenAI’s Vista moment—an overpriced stopgap that exists solely because GPT-5 isn’t ready. While its creative writing flair dazzles casual users, developers face an existential dilemma:

“Do we bankrupt ourselves for marginal gains or jump ship to open-source?”

The model’s legacy may be unintended: accelerating the very open-source movement it aimed to outpace. As LLaMA 3’s 400B parameter model looms, OpenAI’s pricing arrogance could prove its undoing.

Up next: “We Replaced Our AI Team with Claude 3.7—Here’s What Happened” (Spoiler: Our CTO got demoted)
